The digital era leaves a huge data trail in its wake, and that data is penetrating nearly every aspect of our lives. Some might say that it has even spawned a new evolution of the human being: the Digital Data Junkie.

Long gone are the days when we’d be satisfied with shooting one roll of film on a day trip, or with printing and archiving just the one or two really important documents. Digitization is empowering a new reality, one that allows us to take hundreds of photos a day and save each and every document to the cloud without a second thought.

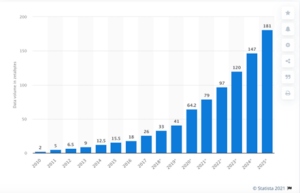

The numbers tell a story of exponential growth. In 2010, for example, the entire world created, captured, copied and consumed just 2 zettabytes of data. By the end of this year, that number is expected to reach 79 zettabytes, and it shows no sign of slowing down anytime soon.

Source: Statista.com

This insatiable demand for data has created a lucrative market, one that Big Tech companies have already taken over. Companies like Facebook, Amazon, Google and Apple are capitalizing on our addiction to data. They’re the ones running the data centers and public clouds that facilitate the collecting, processing, analyzing and storing of our data: the critical data infrastructure that’s powering the data revolution.

And if you think our addiction to data is confined to Earth, you’re in for a surprise. Data has been accumulating in Space for years. As this trend accelerates, now is the perfect time to take a look at how data is currently handled in Space, and discuss how it will be handled in the future.

Data in Space: How’d that Happen?

Over the last few years, cheaper launch technology has finally made the commercialization of the final frontier viable. Commercial imaging and Earth observation companies are the first to capitalize on this brave new world, which we call the New Space. For some years now they’ve been launching remote-sensing satellites that support continuous observation for verticals such as weather forecasting, military observation, telecommunication, agriculture and more. The data they capture is used in many varied ways: monitoring crop yield, identifying flood-prone areas, customer-behavior research, intelligence-gathering and traffic pattern analysis, to name just a few.

The bottom line is that these commercial endeavors are creating huge amounts of data. More specifically, they generate an average of 100 TB of data a day, every single day of the year.
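To put that daily figure in perspective, it can be converted into an average sustained data rate. The short calculation below is only a back-of-the-envelope sketch: it assumes decimal units (1 TB = 10^12 bytes) and a perfectly even flow of data around the clock, which real satellite downlinks do not have. The function name is our own, chosen for illustration.

```python
# Back-of-the-envelope: what average link rate does 100 TB/day imply?
# Assumptions: decimal units (1 TB = 10**12 bytes) and an even, 24-hour flow.

SECONDS_PER_DAY = 24 * 60 * 60


def average_rate_gbps(terabytes_per_day: float) -> float:
    """Return the average data rate in gigabits per second."""
    bits_per_day = terabytes_per_day * 10**12 * 8
    return bits_per_day / SECONDS_PER_DAY / 10**9


if __name__ == "__main__":
    print(f"{average_rate_gbps(100):.2f} Gbit/s")  # prints "9.26 Gbit/s"
```

In other words, 100 TB a day works out to roughly 9.26 Gbit/s, every second of every day, before any allowance for the limited windows in which a satellite can actually reach a ground station.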

Transforming Data from Space into Valuable Insights

Extracting the valuable insights locked within the data generated in Space requires that it be analyzed, processed and stored, just like any other data. However, the current standard processes for doing this leave much room for improvement. Basically, existing practices dictate that data should be generated in Space and then transmitted to Earth, where infrastructure that supports advanced data computing tasks like storage, analysis and processing awaits.

With more and more private companies launching more and more satellites into Space, the amount of data generated in Space is growing by leaps and bounds. This makes the above-mentioned process of handling this data particularly problematic. It cannot support the generation of real-time, actionable insights and time-sensitive analysis, and it also raises the question of sustainability. How long can existing processes support the potentially infinite amount of data extracted from Space?

Space Edge Computing: The Great Enabler

Accessing real-time insights derived from data generated in Space can save lives and support more productive, effective Space missions. For example, real-time access could help astronauts make data-driven decisions such as finding the optimal location on the moon’s surface for experiments or quickly diagnosing a sick colleague. It could also enable earlier detection of environmental disasters on Earth, like oil spills and forest fires. Unfortunately, with a single temperature reading taking an average of 5-20 minutes to reach the Earth’s surface, real-time insights simply aren’t feasible with existing practices.

The good news is that advanced edge computing capabilities can potentially help us bring real-time insights to Space. Edge computing basically moves the processing and analysis capabilities away from the data center and places them as close as possible to the actual data source.

But real-time analysis is just the tip of the iceberg when it comes to the advantages that edge computing in Space can deliver. With edge computing, there’s no need to store, or even transmit to Earth, so much data, as much of it has already been processed and its insights extracted in Space. Imaging tasks are more successful when the data captured is analyzed immediately; that way, operators can instantly verify that the imaging mission reached its objectives.

Data storage and downlink transmission are managed more efficiently when fewer images and less data need to be transmitted. Complicated Space missions such as docking at Space stations are simplified when the complex calculations and interspace communication required to facilitate smooth navigation take place in Space.
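The downlink saving described above can be illustrated with a toy example. The sketch below is purely hypothetical: the `Tile` type, the cloud-cover threshold and the sample values are our own assumptions, not a description of any real satellite's software. It stands in for an onboard step that analyzes each captured image tile and queues only the usable ones for transmission to Earth.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tile:
    """One hypothetical image tile captured by an imaging satellite."""
    tile_id: int
    cloud_fraction: float  # 0.0 = clear sky, 1.0 = fully clouded
    size_bytes: int


def select_for_downlink(tiles: List[Tile], max_cloud: float = 0.3) -> List[Tile]:
    """Onboard filter: keep only tiles clear enough to be useful on the ground."""
    return [t for t in tiles if t.cloud_fraction <= max_cloud]


def downlink_savings(tiles: List[Tile], kept: List[Tile]) -> float:
    """Fraction of captured bytes that no longer needs to be transmitted."""
    total = sum(t.size_bytes for t in tiles)
    sent = sum(t.size_bytes for t in kept)
    return 0.0 if total == 0 else 1 - sent / total


# Made-up sample data: four equally sized tiles, two of them mostly clouded.
captured = [
    Tile(1, 0.05, 200_000_000),
    Tile(2, 0.90, 200_000_000),
    Tile(3, 0.20, 200_000_000),
    Tile(4, 0.75, 200_000_000),
]
kept = select_for_downlink(captured)
print([t.tile_id for t in kept], f"{downlink_savings(captured, kept):.0%} saved")
# prints: [1, 3] 50% saved
```

With this made-up sample, half of the captured bytes never have to leave the satellite. A real system would use a far more sophisticated analysis step, but the principle is the same: decide in Space what is worth sending to Earth.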

Data Centers in Space, The Next Big Thing

All these benefits, and more, can be delivered by integrating edge computing into dedicated data centers. These data centers could potentially reside in satellites, or even on the moon. They’d be set up with software-defined hardware, enabling remote upgrades and remote data center management. Data centers are perhaps our best bet for unlocking a huge number of new capabilities that can significantly upgrade the benefits of commercial Space endeavors:

Servicing other satellites

The ability to process data in Space enables data center satellites to provide computing and storage services to other satellites. This will empower the creation of a “cloud-like” data network in Space so that observational and imaging satellites can offload data storage and computing to the data centers. This, in turn, will allow for the development of smaller, cheaper commercial satellites that won’t require built-in computing equipment.

Outsourcing pollution to Space

Power-hungry, energy-intensive computing tasks with huge carbon footprints, such as cryptocurrency mining, can be moved from Earth to massive computing machines in Space to help reduce pollution levels.

The Challenges of Setting Up A Data Center in Space

The commercialization of Space has transformed it into a blue ocean market for data centers. New companies are entering the arena with a mission to deploy organized, commercial efforts that solve the issue of handling data in Space.

There are already a number of companies tapping into this growing industry. Companies and agencies like the SDA, LEOcloud, LyteLoop and others are integrating micro data centers into their satellites to handle Space data processing and provide services to other satellites. This trend is only just getting started, but there are several challenges that must be overcome before it can really accelerate.

- Access to Space. Deploying data centers in Space obviously requires the ability to get them into Space. Launch costs, traditionally the number one barrier to entry in this industry, are falling, and companies are beginning to offer commercial services that meet this need.

- Energy supply. Data centers consume large amounts of energy. New, innovative methods for continuously delivering sufficient levels of energy to the data center need to be developed. Solar energy, which is usually easier to produce in Space, is one potential solution.

- Temperature. Just as servers on Earth require constant cooling and tight climate control, so do those in Space. Maintaining optimal temperature levels in the data center to prevent overheating is a critical challenge.

- Radiation. Space radiation is a significant hazard due to the much larger flux of high-energy galactic cosmic rays (GCRs). Data centers must be able to withstand the higher levels of radiation in Space.

- Computing and Storage. Earth-like computing and storage infrastructure that is resilient to the harsh conditions of Space needs to be developed, built and deployed. This infrastructure must be robust enough to withstand Space conditions while still delivering the processing power and other mission-critical features required for handling data in Space. This is precisely what we’re solving at Ramon.Space. Our Space-resilient super-computer systems are driven by AI/ML processors that enable the realization of Earth-like computing capabilities in Space.

Boosting the evolution of the Space ecosystem

The near future will see an explosion of new Space-focused applications that we currently can’t even imagine. Think AI-powered autonomous moon rovers. Space data centers open up the possibility of entirely new ecosystems in Space powered by extraterrestrial computing and storage.

Summary

In our data-driven world, the ability to quickly move, process and store data is powering disruptive, positive change in every industry. With the commercialization of Space now a reality, existing data management processes that dictate the transmission of Space data to Earth for handling must be improved. The latest edge computing technologies have the potential to move the processing from Earth to Space. This raises the question: if we can handle it all locally in Space, why even consider any other way?
